Mingze Wang

|

Mingze Wang (王铭泽) School of Mathematical Sciences 210, Jingyuan Building #6 (静园六院), Peking University Email: mingzewang [at] stu [dot] pku [dot] edu [dot] cn [Google Scholar] [CV] |

About me

I am a final-year Ph.D candidate in Computational Mathematics, School of Mathematical Sciences, Peking University (2021-Present). I am very fortunate to be advised by Prof. Weinan E. Prior to that, I received my B.S. degree in Pure and Applied Mathematics (ranking 1/111 for the first three years during my undergraduate study) from School of Mathematical Sciences, Zhejiang University, Hangzhou, China in 2021.

Please feel free to drop me an email if you are interested in collaborating with me.

News

[2025.11] I won the 2025 ByteDance Scholarship (awarded to 20 students in China and Singapore); my advisor received the Best Mentor Award.

[2025.09] One paper accepted to NeurIPS 2025 as a Spotlight (top 3.5%).

[2025.05] One paper accepted to ICML 2025.

[2025.01] One paper accepted to ICLR 2025 as a Spotlight (top 5.1%).

[2024.12] I received support from the Young Scientists (Ph.D) Fund of the National Natural Science Foundation of China.

[2024.09] I won the 2024 China National Scholarship (top 0.2% in the nation).

[2024.09] Three papers accepted to NeurIPS 2024.

[2024.05] One paper accepted to ICML 2024. One paper accepted to ACL 2024.

[2023.11] I won the 2023 BICMR Mathematical Award for Graduate Students (top 1%).

[2023.09] One paper accepted to NeurIPS 2023 as a Spotlight (top 3.5%).

[2022.11] I have passed the Ph.D. qualifying exam.

[2022.10] I won the 2022 PKU Academic Innovation Award (top 1%).

[2022.09] Two papers accepted to NeurIPS 2022.

Research Interests

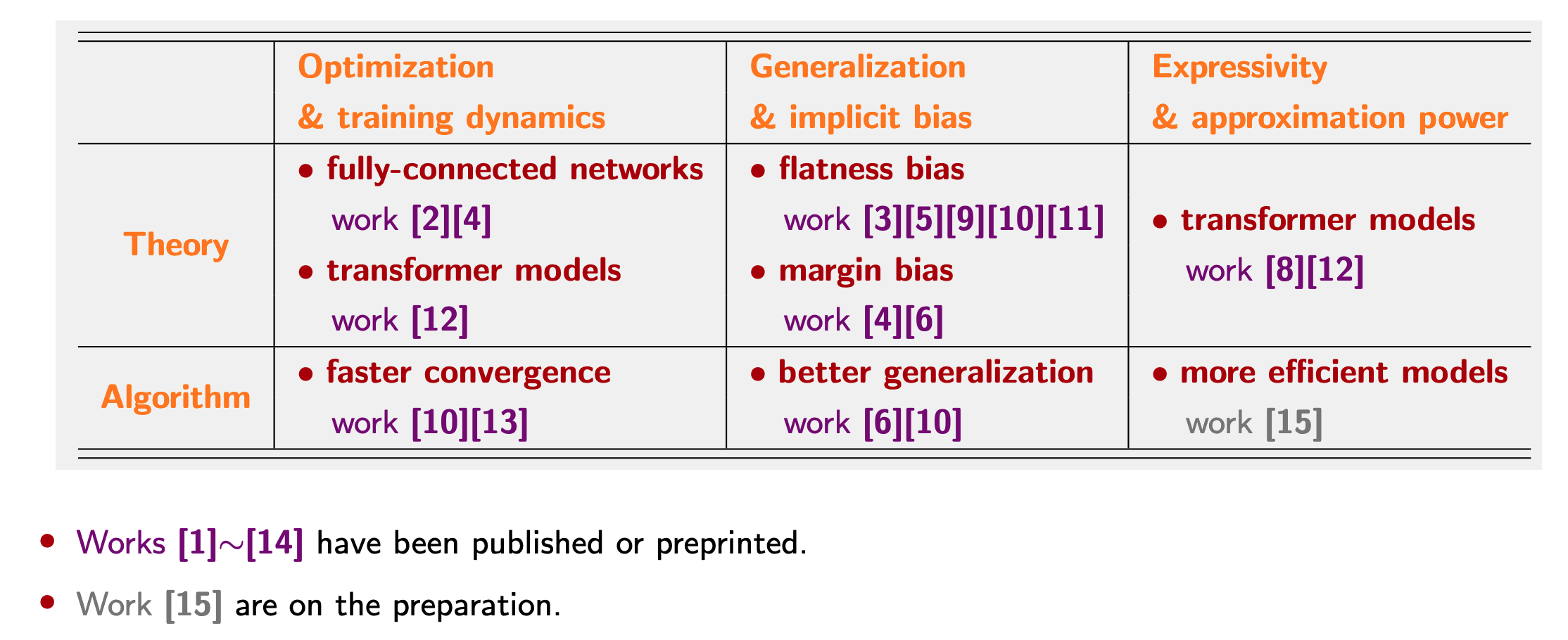

I am broadly interested in theory, algorithm and application of machine learning. I am also interested in non-convex and convex optimization.

Recently, I am dedicated to use theory to design algorithms elegantly.

My recent research topics are

Deep Learning Theory: expressivity, optimization, generalization, implicit bias. [1][2][3][4][5][6][8][9][10][11][12][13][14][15][16][17][18][19][20]

Transformer and Large Language Model: theory and algorithm, especially in LLM pre-training. [8][10][12][13][16][17][18][19][20]

Non-convex and Convex Optimization: theory and algorithm. [2][4][6][10][11][12][13][14][15][18][19]

Specifically, my research on deep learning theory and algorithm can be summarized as:

|

My current research is supported the Young Scientists (Ph.D) Fund of the National Natural Science Foundation of China. (Analyzing and Improving the Adam Optimizer for Foundation Model Training)

Recent Publications and Preprints

* indicates equal contribution, † means project lead.

[20] Low-probability Tokens Sustain Exploration in Reinforcement Learning with Verifiable Reward

Guanhua Huang*, Tingqiang Xu*, Mingze Wang, Qi Yi, Xue Gong, Siheng Li, Ruibin Xiong, Kejiao Li, Yuhao Jiang, Bo Zhou.

under review, arXiv preprint, 1-21. Sep 2025.[19] Towards Revealing the Effect of Batch Size Scheduling on Pre-training

Jinbo Wang*, Binghui Li*, Zhanpeng Zhou, Mingze Wang, Yuxuan Sun, Jiaqi Zhang, Xunliang Cai, Lei Wu.

under review. Sep 2025.[18] GradPower: Powering Gradients for Faster Language Model Pre-Training

Mingze Wang*†, Jinbo Wang*, Jiaqi Zhang, Wei Wang, Peng Pei, Xunliang Cai, Weinan E, Lei Wu.

under review, arXiv preprint, 1-22. May 2025.[17] A Mechanistic Study of Transformer Training Instability under Mixed Precision

Shengtao Guo, Mingze Wang, Jinbo Wang, Lei Wu.

under review. May 2025.[16] On the Expressive Power of Mixture-of-Experts for Structured Complex Tasks

Mingze Wang†, Weinan E.

2025 Conference on Neural Information Processing Systems (NeurIPS 2025) (Spotlight, top 3.5%), 1-18.[15] On the Learning Dynamics of Two-layer Linear Networks with Label Noise SGD

Tongcheng Zhang, Zhanpeng Zhou, Mingze Wang, Andi Han, Wei Huang, Taiji Suzuki, Junchi Yan.

2026 AAAI Conference on Artificial Intelligence (AAAI 2026) (Oral).[14] A Single Global Merging Suffices: Recovering Centralized Learning Performance in Decentralized Learning

Tongtian Zhu, Tianyu Zhang, Mingze Wang, Zhanpeng Zhou, Can Wang.

ICLR 2025 Workshop on Weight Space Learning (ICLR 2025 - WSL), 1-23.[13] The Sharpness Disparity Principle in Transformers for Accelerating Language Model Pre-Training

Jinbo Wang*, Mingze Wang*†, Zhanpeng Zhou*, Junchi Yan, Weinan E, Lei Wu.

2025 International Conference on Machine Learning (ICML 2025), 1-23.[12] How Transformers Get Rich: Approximation and Dynamics Analysis

Mingze Wang†, Ruoxi Yu, Weinan E, Lei Wu.

ICML 2025 Workshop on High-dimensional Learning Dynamics (ICML 2025 - HiLD), 1-47.[11] Sharpness-Aware Minimization Efficiently Selects Flatter Minima Late in Training

Zhanpeng Zhou*, Mingze Wang*, Yuchen Mao, Bingrui Li, Junchi Yan.

2025 International Conference on Learning Representations (ICLR 2025) (Spotlight, top 5.1%), 1-31.[10] Improving Generalization and Convergence by Enhancing Implicit Regularization

Mingze Wang†, Jinbo Wang, Haotian He, Zilin Wang, Guanhua Huang, Feiyu Xiong, Zhiyu Li, Weinan E, Lei Wu

2024 Conference on Neural Information Processing Systems (NeurIPS 2024), 1-44.[9] Loss Symmetry and Noise Equilibrium of Stochastic Gradient Descent

Liu Ziyin, Mingze Wang, Hongchao Li, Lei Wu

2024 Conference on Neural Information Processing Systems (NeurIPS 2024), 1-26.[8] Understanding the Expressive Power and Mechanisms of Transformer for Sequence Modeling

Mingze Wang, Weinan E

2024 Conference on Neural Information Processing Systems (NeurIPS 2024), 1-76.[7] Are AI-Generated Text Detectors Robust to Adversarial Perturbations?

Guanhua Huang, Yuchen Zhang, Zhe Li, Yongjian You, Mingze Wang, Zhouwang Yang

2024 Annual Meeting of the Association for Computational Linguistics (ACL 2024), 1-20.[6] Achieving Margin Maximization Exponentially Fast via Progressive Norm Rescaling

Mingze Wang†, Zeping Min, Lei Wu

2024 International Conference on Machine Learning (ICML 2024), 1-38.[5] A Theoretical Analysis of Noise Geometry in Stochastic Gradient Descent

Mingze Wang, Lei Wu

NeurIPS 2023 Workshop on Mathematics of Modern Machine Learning (NeurIPS 2023 - M3L), 1-30.[4] Understanding Multi-phase Optimization Dynamics and Rich Nonlinear Behaviors of ReLU Networks

Mingze Wang†, Chao Ma

2023 Conference on Neural Information Processing Systems (NeurIPS 2023) (Spotlight, top 3.5%), 1-94.[3] The alignment property of SGD noise and how it helps select flat minima: A stability analysis

Lei Wu, Mingze Wang, Weijie J. Su

2022 Conference on Neural Information Processing Systems (NeurIPS 2022), 1-25.[2] Early Stage Convergence and Global Convergence of Training Mildly Parameterized Neural Networks

Mingze Wang†, Chao Ma

2022 Conference on Neural Information Processing Systems (NeurIPS 2022), 1-73.[1] Generalization Error Bounds for Deep Neural Networks Trained by SGD

Mingze Wang†, Chao Ma

arXiv preprint, 1-32, June 2022.

Selected Awards and Honours

ByteDance Scholarship (awarded to 20 students in China and Singapore); my advisor received the Best Mentor Award, 2025.

Young Scientists (Ph.D) Fund of the National Natural Science Foundation of China (¥300,000), 2024.

China National Scholarship (top 0.2% in the nation), The Ministry of Education, 2024.

Principal Scholarship, Peking University, 2024; 2025.

BICMR Mathematical Award for Graduate Students (top 1%), Peking University, 2023.

PKU Academic Innovation Award (top 1%), Peking University, 2022.

Outstanding Graduate of Zhejiang Province (top 5%), Zhejiang Province, 2021.

First Class Scholarship of ZJU (top 3%), Zhejiang University, 2019; 2020.

China National Scholarship (top 0.2% in the nation), The Ministry of Education, 2019.